Rootcat Easteregg - The Hunt for red S3

This is the walkthrough and explanation for the realistic S3 misconfiguration used in the recent easter egg hunt on this blog. Read the rules and info here on my Twitter thread.

In short: On this blog, I hid some ASCII kittens. Some were just for fun, others gave hints or contained information to find an S3 bucket. Finding the information for the S3 bucket is referred to as phase one. The bucket itself had a security vulnerability that I encountered in my real-world engagements and that I know a lot of people are not aware of, which is why I set it up this way so I could eggsplain it here. In the bucket itself was a jpeg file. Retrieving this file is phase two; it fulfills the prophecy and ends the easter egg hunt.

Phase one

So, the first step was basically finding my blog (lol) and then throwing random URLs at it. Some, like /admin, delivered an easter egg kitten, while others, the scanning_kittens, basically said that scanning is not much use here. These sat on paths that come up early in the wordlist, like the dirsearch kitten:

To achieve phase one, a kitten somewhat connected to AWS creds needed to be found. The one found by the participants (there was one more that no one found) was located under /aws/config:

It could mean isroot or isloot depending on the applied UwU. By checking the URL /uwu one could find more on this, but it was not really needed:

All of this left candidates for the bucket name like

number.isloot

numberisroot

number-isloot

….

By trial and error one would find that the correct bucket name was numberisloot, located in Frankfurt (eu-central-1), and could begin phase two.
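The trial and error can be sketched in a few lines. This is only an illustration, assuming the candidates are built from a prefix, a separator and a suffix; "number" is the same placeholder used in the list above (the real value is not reproduced here), and the helper names are made up for this example.

```python
from itertools import product

# Placeholder pieces: "number" stands in for the numeric hint, exactly as in
# the candidate list above; the real value is intentionally not filled in.
PREFIX = "number"
SEPARATORS = ["", ".", "-"]
SUFFIXES = ["isroot", "isloot"]


def candidate_bucket_names(prefix, separators, suffixes):
    """Return every prefix + separator + suffix combination."""
    return [f"{prefix}{sep}{suffix}" for sep, suffix in product(separators, suffixes)]


def bucket_url(name, region="eu-central-1"):
    """Virtual-hosted-style URL for a bucket; eu-central-1 is Frankfurt."""
    return f"https://{name}.s3.{region}.amazonaws.com/"


candidates = candidate_bucket_names(PREFIX, SEPARATORS, SUFFIXES)
for name in candidates:
    print(name, bucket_url(name))
```

Requesting such a URL without credentials typically answers with a NoSuchBucket error when the name does not exist and with AccessDenied when it exists but is not listable, which is enough to tell the candidates apart.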

Phase two

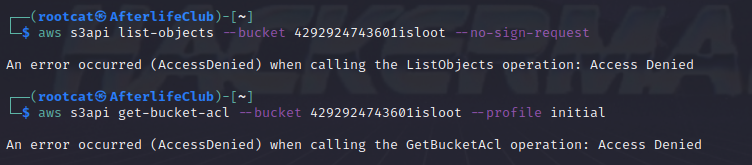

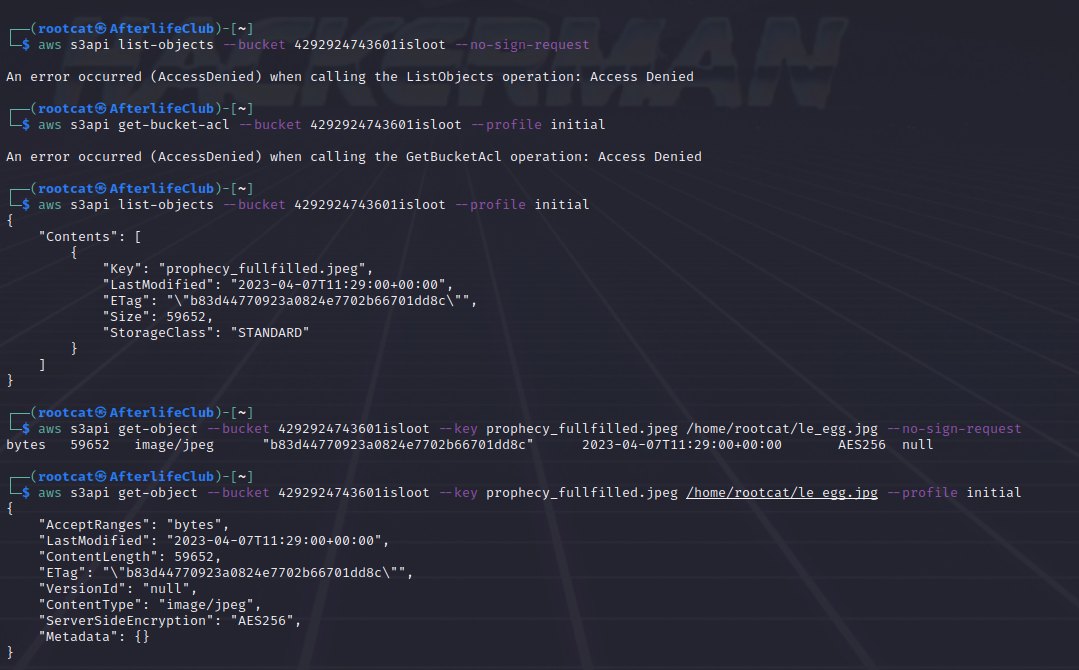

Using the aws cli, one would typically check whether the bucket is publicly accessible and whether the access control lists (ACLs) can be read. The option --no-sign-request tells the aws cli not to send any credentials; otherwise the default creds stored in ~/.aws are used, or a specific set of keys is selected via the option --profile name. In this specific case there are no keys associated with the easter egg S3 bucket, so basically one would use ANY valid AWS keys.

As can be seen, it is not possible to list the bucket objects without creds. It is also not possible to show the ACL of the bucket with random but valid AWS creds. Access denied, you say, so the bucket is not public, you say. Well, it's never that binary, I say ….

Because in this case it is possible to list the bucket contents with ANY valid AWS creds, even though those creds are not at all connected with the bucket, but not when no creds are provided.

The object which is known by name after the listing can then be retrieved, with or without creds:

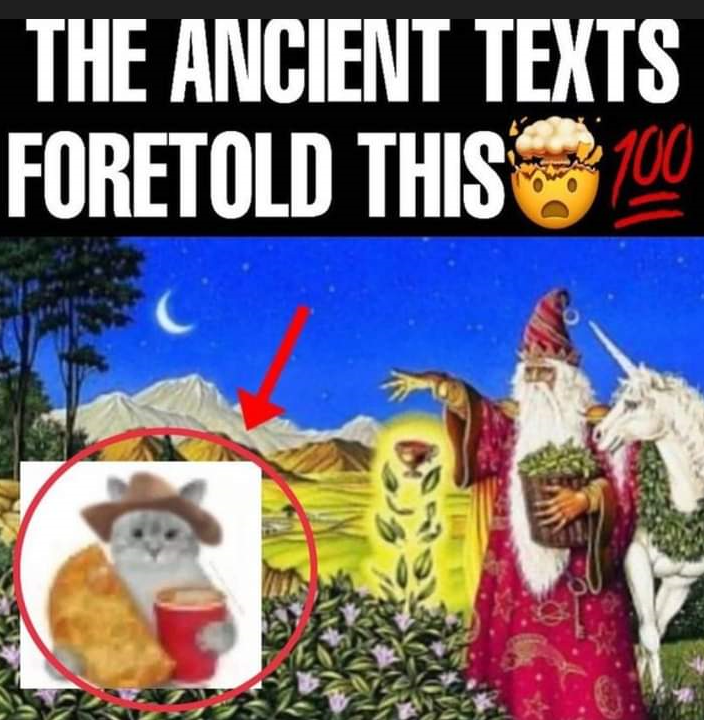
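The probing sequence described above can be written down as aws cli calls. The sketch below only builds and prints the command lines, it does not run them; the exact commands in the screenshots may differ, "numberisloot" is the placeholder bucket name from this post, and the profile called "name" is a hypothetical profile holding any valid AWS keys.

```python
import shlex

BUCKET = "numberisloot"              # placeholder name, as used in this post
OBJECT_KEY = "prophecy_fullfilled.jpeg"
PROFILE = "name"                     # hypothetical profile with ANY valid AWS keys


def aws(*args, profile=None):
    """Build an aws cli argument list, signed with a profile or unsigned."""
    auth = ["--profile", profile] if profile else ["--no-sign-request"]
    return ["aws", *args, *auth]


probes = [
    # 1. list without creds (denied in the easter egg setup)
    aws("s3", "ls", f"s3://{BUCKET}"),
    # 2. read the bucket ACL with unrelated creds (denied as well)
    aws("s3api", "get-bucket-acl", "--bucket", BUCKET, profile=PROFILE),
    # 3. list with unrelated creds (works)
    aws("s3", "ls", f"s3://{BUCKET}", profile=PROFILE),
    # 4. download the object without creds (works once the key is known)
    aws("s3", "cp", f"s3://{BUCKET}/{OBJECT_KEY}", "."),
]

for argv in probes:
    print(shlex.join(argv))
```

Each list can be handed to subprocess.run as-is if one actually wants to execute the probe.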

And this fulfills the prophecy, when the file is viewed (two people achieved this):

Wait what?

How does this work, how does this happen, what does public even mean then? Well, it’s complicated.

I will simplify here, but basically there is access control on bucket level (the S3) and on object level (the jpeg), and there are permissions based on resources and permissions based on, let’s say (not really correct), users.

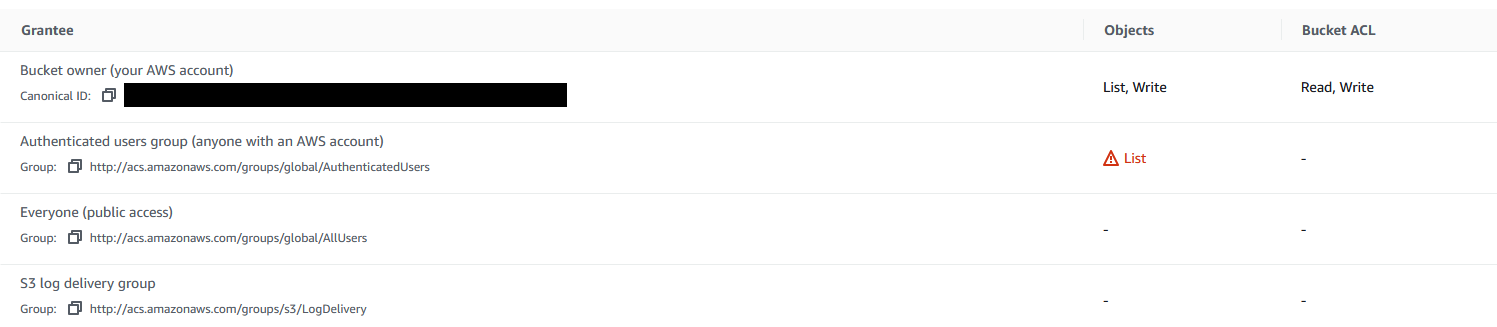

This is the acl of the bucket:

Okay, this explains why we can’t list the objects or look at the ACL itself without creds (public). It says the “Authenticated users group” can list the objects, and it says this group is anyone with an AWS account. Even though it says anyone right there, this misconfiguration happens a lot, because people think public is blocked and somehow assume that “Authenticated users group” implies some sort of check. But there is none: ANY valid AWS keys will work.
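To make the point concrete, here is a small sketch that flags grants to the two predefined S3 groups in an ACL shaped like the JSON output of get-bucket-acl. The example ACL is hypothetical and only modeled on the description above (owner with full control, authenticated users with read); it is not the real output of the easter egg bucket, and the function name is made up for this example.

```python
# Predefined S3 group URIs that make a grant effectively public.
RISKY_GROUPS = {
    "http://acs.amazonaws.com/groups/global/AllUsers":
        "anyone, no credentials needed",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers":
        "anyone with any AWS account",
}


def risky_grants(acl):
    """Return (permission, meaning) pairs for grants to the broad groups."""
    findings = []
    for grant in acl.get("Grants", []):
        uri = grant.get("Grantee", {}).get("URI")
        if uri in RISKY_GROUPS:
            findings.append((grant["Permission"], RISKY_GROUPS[uri]))
    return findings


# Hypothetical ACL modeled on the description above, not real bucket output.
example_acl = {
    "Owner": {"ID": "example-owner-id"},
    "Grants": [
        {"Grantee": {"Type": "CanonicalUser", "ID": "example-owner-id"},
         "Permission": "FULL_CONTROL"},
        {"Grantee": {"Type": "Group",
                     "URI": "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"},
         "Permission": "READ"},
    ],
}

findings = risky_grants(example_acl)
print(findings)
```

On a bucket, READ for that group means listing the objects, which is exactly what worked with unrelated keys.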

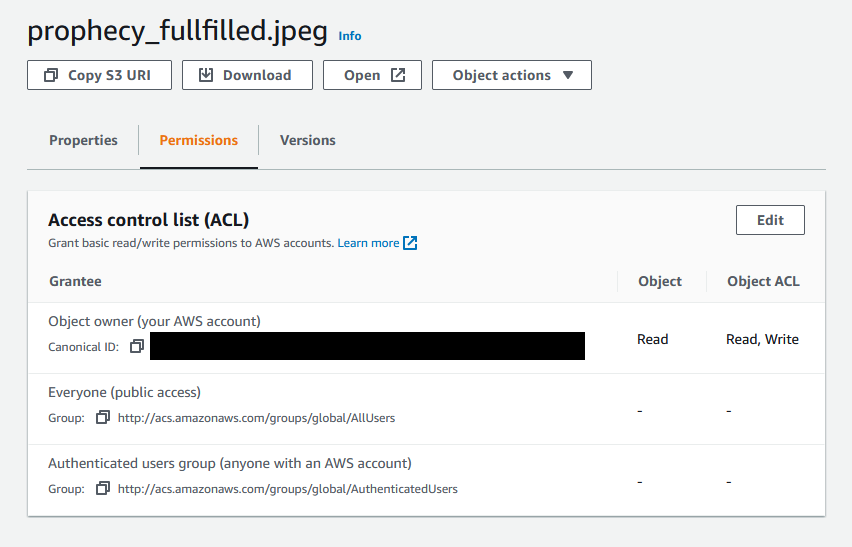

Let’s look at the object acl:

So, this states that only the owner of the bucket can view/read the object. Wait what, but it was pawsible to download it with random but valid AWS keys and also with none at all (--no-sign-request). This makes no sense, right?

Well yes, but actually no, because there are also policies. Besides the ACLs there is a permission document that covers the object (in S3 it is attached to the bucket as the bucket policy, but its Resource can point at a single object), let’s have a look:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::xxxxxxxxxxxxisloot/prophecy_fullfilled.jpeg"
        }
      ]
    }

This statement allows the specific action s3:GetObject on the object with the key prophecy_fullfilled.jpeg. "Principal": "*" means this can be done by anyone, with or without creds.
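A quick way to spot statements like this one is to scan a policy for Allow statements with a wildcard principal. The following is a minimal sketch with made-up function names, using the policy shown above as input; it deliberately ignores Condition blocks and explicit Deny statements, so it is a first filter and not a full policy evaluation.

```python
import json

POLICY = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::xxxxxxxxxxxxisloot/prophecy_fullfilled.jpeg"
    }
  ]
}
""")


def as_list(value):
    """Policy fields may hold a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def is_wildcard_principal(principal):
    """True for "*" or for a mapping such as {"AWS": "*"}."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return any("*" in as_list(v) for v in principal.values())
    return False


def public_allows(policy):
    """Return (action, resource) pairs that any caller is allowed to use."""
    hits = []
    for stmt in as_list(policy.get("Statement", [])):
        if stmt.get("Effect") == "Allow" and is_wildcard_principal(stmt.get("Principal")):
            for action in as_list(stmt.get("Action", [])):
                for resource in as_list(stmt.get("Resource", [])):
                    hits.append((action, resource))
    return hits


hits = public_allows(POLICY)
print(hits)
```

An Allow in a policy is enough to grant access even when the object ACL only names the owner, as long as nothing explicitly denies the request, which is why the download worked.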

Instead of the wildcard, the Principal could name a specific AWS account, a service and so on. In reality, however, a lot of these wildcard permissions are found.

Mainly due to the following reasons:

They were copied from somewhere.

They always work when it’s a wildcard.

The service that needs access is not yet known.

It was like this during dev.

It was changed but then monitoring/webapp/database didn’t work anymore.

The service (e.g., lambda) is spawned on demand and it’s a real challenge to know its name due to randomization.

….

There are many, many more of these common problems that arise from the complexity that is AWS permissions. I also hope you learned that whenever you see someone doing AWS testing and not throwing random keys around, this person has a lot to learn. Send em to this post :)